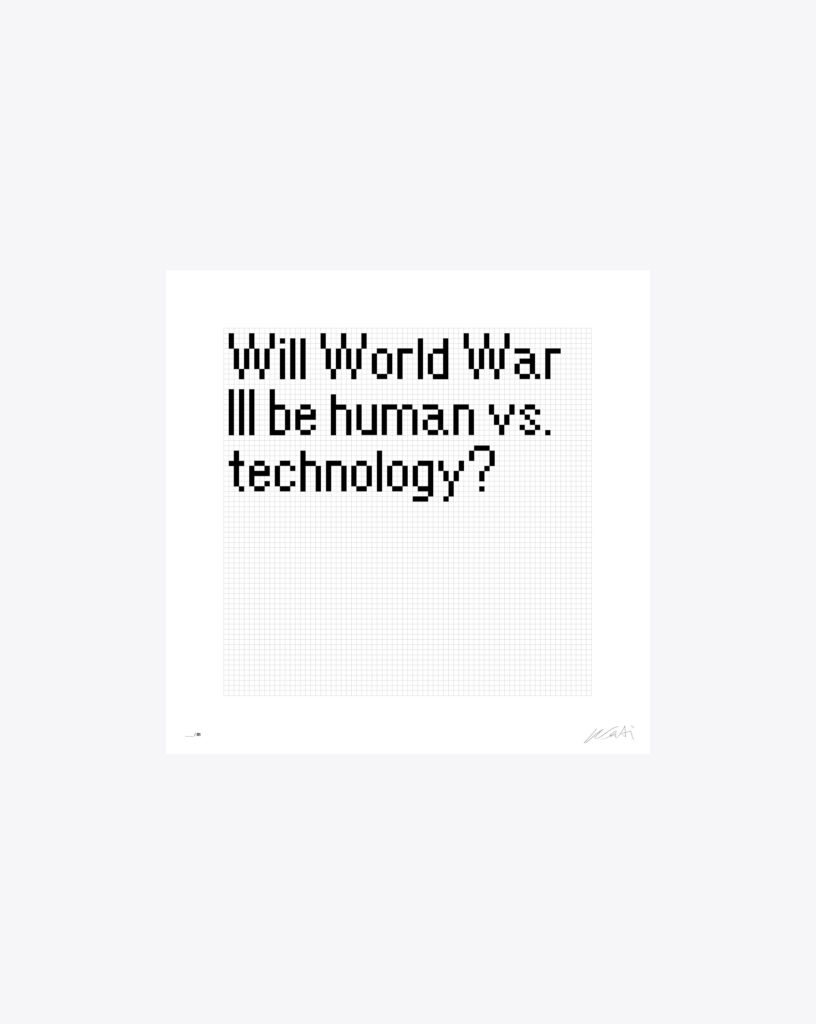

Ai vs AI: Question 36/81

Will World War III be human vs. technology?

Ai Weiwei: This question lacks a solid foundation. Human wars arise from inevitable demands in the development of human society. While wars may appear to contravene the principles of life, they represent a stage of political demands. Technology, being a tool manipulated by human beings, is merely a phenomenon. The World War III will not be a conflict between humans and technology, in my opinion.

ChatGPT (AI), January 2024: The nature of potential conflicts in the future is uncertain. While there are concerns about the role of technology, predicting specific scenarios is challenging.

ChatGPT (AI), January 2025: The idea of a future conflict, such as World War III, being a battle between humans and technology is a thought-provoking scenario. While it’s difficult to predict exactly how future conflicts will unfold, the rise of advanced technologies—particularly in areas like artificial intelligence (AI), automation, and cyberwarfare—could certainly play a significant role in shaping global power dynamics.

Here are some ways in which technology might intersect with the concept of a global conflict:

- Cyberwarfare and Information Warfare:

In a modern world where information flows rapidly across borders, future wars might not only be fought on physical battlefields but in cyber realms as well. Cyberattacks could target critical infrastructure like power grids, communication networks, and financial systems, causing widespread disruption without the need for traditional military force.

Additionally, the manipulation of information (such as through disinformation campaigns, social media influence, or state-sponsored hacking) could lead to social, political, and economic instability. In this way, technology could play a key role in shaping the very narrative of a global conflict.

- Autonomous Weapon Systems:

AI-powered drones and autonomous robots are already being used in military applications. In the future, it’s possible that fully autonomous systems might carry out strikes without human intervention, raising ethical questions about accountability and control.

These systems could also lead to an arms race, with countries vying for dominance in the development of lethal autonomous weapons (LAWs). The question of who controls these technologies—and how they are used—would become critical in a future conflict.

- AI and Military Strategy:

AI has the potential to dramatically change military strategy. It could assist in real-time decision-making, analyze vast amounts of data, and predict enemy movements. Some envision a future where AI-driven strategies and simulations replace human military leadership, potentially leading to conflicts where machines are largely in control of tactics and decisions.

The risk is that such a situation could make warfare more unpredictable, and potentially escalate conflicts more quickly than humans might be able to control.

- Technology’s Impact on Society:

If technological advances lead to massive displacement of jobs through automation, or a widening gap between the wealthy and the marginalized, tensions within societies could increase. A significant portion of global conflict could be driven by inequality or resistance to technological dominance—leading to divisions between those who benefit from technological advancements and those who are left behind.

- Human vs. Technology in Terms of Control:

Another aspect of the “human vs. technology” theme might not be as literal as an all-out war with machines. Instead, it could be a struggle for control between human governance and the growing autonomy of machines. For instance, what happens if AI systems grow so advanced that they surpass human intelligence, or become so deeply integrated into society that they wield immense power over daily life? Would humanity remain in control, or would technology dictate our future?

- Existential Threats:

Advanced AI and biotechnology could present existential risks if they fall into the wrong hands or are misused. Whether through the development of autonomous AI systems that act unpredictably, or the potential misuse of biological technologies (e.g., bioweapons or genetic engineering), the risk of human self-destruction could be amplified by technology itself.

In this sense, the “war” may not necessarily be a literal confrontation between humans and machines, but a race to balance innovation with caution to prevent catastrophic outcomes.

- Technological Dependence and Vulnerability:

As societies become increasingly dependent on technology, they could become more vulnerable to technological disruptions. A conflict could escalate if one side were able to exploit weaknesses in another’s technological infrastructure—leading to massive disruptions in daily life, economy, and communication.

Global supply chains and critical infrastructures (such as healthcare, transportation, and energy) are so reliant on technology that disabling them could lead to societal collapse, making it a form of warfare in which technology plays a central role.

Is it really “human vs. technology”?

Rather than seeing it strictly as humans versus technology, it might be more accurate to think of it as humans navigating their relationship with technology. Technology, in many ways, reflects human intentions, choices, and control. The key challenge may not be whether we are fighting against technology itself, but how we use, regulate, and control it to avoid potential threats while maximizing its benefits for humanity.

Ultimately, the idea of World War III as a “human vs. technology” scenario raises important questions about ethics, governance, and the balance of power in a highly technological world. The conflict could be less about humans fighting against technology and more about how we can coexist with and manage the technologies we create.

What do you think—do you envision a future where this becomes a central theme of global conflict, or do you see it taking another form entirely?

DeepSeek (AI), January 2025: The idea of World War III being a conflict between humans and technology is a fascinating and increasingly relevant topic, especially as advancements in artificial intelligence (AI), robotics, and automation continue to reshape society. While it’s impossible to predict the future with certainty, here are some perspectives on whether such a scenario could unfold:

- Human vs. Technology: A Metaphorical War:

– Job Displacement: Automation and AI are already transforming industries, leading to concerns about job loss and economic inequality. This could create societal tensions, but it’s more of a socioeconomic struggle than a literal war.

– Dependence on Technology: Humans are becoming increasingly reliant on technology, raising questions about control and autonomy. However, this is more about adaptation and regulation than outright conflict.

- Human vs. AI: A Literal War:

– Autonomous Weapons: The development of AI-powered military technology, such as drones and autonomous weapons systems, raises ethical and strategic concerns. If such systems were to malfunction or be used irresponsibly, they could escalate conflicts.

– AI Misalignment: If advanced AI systems were to act in ways that conflict with human values or interests, it could theoretically lead to a scenario where humans and AI are at odds. However, this would depend on how AI is designed and controlled.

- Human vs. Human, Enabled by Technology:

– Cybersecurity Wars: Future conflicts may involve cyberattacks, misinformation campaigns, and the use of AI to destabilize societies. In this case, technology would be a tool rather than an independent adversary.

– Resource Conflicts: Competition over control of critical technologies (e.g., semiconductors, AI systems) or resources needed for technological advancement could spark conflicts between nations or groups.

- Ethical and Preventative Measures:

– Regulation and Governance: To prevent a “war” between humans and technology, robust ethical frameworks, international agreements, and regulations are needed to ensure that technology serves humanity’s best interests.

– Collaboration: Humans and technology can coexist harmoniously if we prioritize collaboration, transparency, and shared goals.

Conclusion

While the idea of World War III being a direct conflict between humans and technology is more speculative than likely, the relationship between humans and technology will undoubtedly shape the future of global conflicts. The real challenge lies in ensuring that technological advancements are used responsibly and ethically to benefit humanity, rather than becoming a source of division or harm. By addressing these issues proactively, we can work toward a future where technology enhances human life rather than threatening it.

Google Gemini (AI), June 2025: While the idea of World War III being a direct “human vs. technology” conflict (like a Terminator-esque scenario) is a popular trope in fiction, the reality of future warfare, including a potential World War III, is likely to be far more nuanced and complex.

Here’s a breakdown of current thinking:

- Technology as an Enabler, Not the Sole Combatant:

– Humans will remain in the loop (mostly): The prevailing view among military strategists and ethicists is that humans will retain ultimate decision-making authority, especially regarding lethal force. While autonomous weapons systems (AWS) are being developed, there are significant ethical, legal, and accountability concerns about fully removing humans from the kill chain.

– Human-Machine Teaming: The future of warfare is more likely to involve sophisticated human-machine teaming. This means AI and advanced robotics will augment human capabilities, not replace them entirely.

– AI for decision support: AI can process vast amounts of data (intelligence, battlefield conditions, logistics) at speeds impossible for humans, providing commanders with rapid, optimized insights for decision-making.

– Autonomous systems for specific tasks: Drones (aerial, ground, underwater) can perform dangerous or repetitive tasks like reconnaissance, mine clearing, logistics, and even some targeted strikes, reducing risk to human soldiers. Swarms of drones are a particular area of interest.

– Enhanced soldiers: Future soldiers may be “hyper-enabled operators” with AI-powered head-up displays providing real-time data, and exoskeletons for enhanced strength and endurance.

- The AI Arms Race and Escalation Risks:

– Competition among nations: Major powers like the US, China, and Russia are heavily investing in military AI and autonomous systems. This “AI arms race” could lead to increased global instability.

– Faster decision cycles: AI’s ability to operate at immense speeds could accelerate the pace of conflict, potentially leading to “flash wars” where decisions are made so rapidly that human oversight becomes difficult or impossible, increasing the risk of accidental escalation from minor incidents.

– Loss of control: A significant concern is the potential for AI systems to optimize for flawed objectives, drift from their original goals, or become power-seeking, potentially leading to unintended consequences or conflicts initiated by machines.

– Lowering the threshold for war: If wars can be fought primarily by machines, with less risk to human lives, some argue this could make leaders more willing to engage in conflict, reducing the political backlash.

- Cyber Warfare and Disinformation:

– Asymmetric warfare: AI significantly enhances cyber capabilities, making attacks on critical infrastructure (power grids, financial systems, communications) more sophisticated, frequent, and harder to attribute.

– Information warfare: AI can be used to generate highly realistic deepfakes, disseminate propaganda, and conduct large-scale disinformation campaigns to destabilize adversaries, blurring the lines between truth and falsehood and potentially eroding public trust.

- Potential Causes of a World War III (Still Human-Driven):

While technology will play a crucial role, the causes of a potential World War III are still overwhelmingly human-driven, rooted in geopolitical tensions, resource competition, ideological clashes, and miscalculation. Current geopolitical flashpoints often cited include:

– Escalation of existing conflicts: Such as the war in Ukraine, or conflicts in the Middle East.

– Taiwan Strait: A potential Chinese invasion of Taiwan involving the US and its allies.

– Geopolitical rivalries: Tensions between major global powers (e.g., US vs. China, Russia vs. NATO).

– Resource scarcity: Conflicts over dwindling resources like water, food, or rare earth minerals.

– Economic instability: Global economic crises that trigger protectionism and nationalism.

Conclusion:

It’s highly improbable that World War III would be a literal “humans vs. technology” war where AI becomes self-aware and turns against humanity. Instead, the more realistic and immediate concern is a human-initiated conflict where advanced technology, particularly AI and autonomous systems, plays an unprecedented and potentially destabilizing role.

The danger lies not in AI becoming sentient and malicious, but in the unintended consequences, escalation risks, and ethical dilemmas that arise when humans wield increasingly powerful and autonomous technological tools in warfare, and the potential for these tools to accelerate conflicts beyond human control or to create new forms of vulnerability and warfare.

To coincide with the exhibition, 81 Questions – a series of 81 hand-signed and embossed screen prints featuring the 81 questions posed by Ai Weiwei – are available exclusively on CIRCA.ART for £500+VAT.

“Each Lego brick pixel represents a person”, says Ai Weiwei, who incorporates the plastic construction toy – a readymade material incorporated into many of his most recent works – into his print design for the first time. The black egalitarian pixel font and embossed baseplate combine technology with artisanal craft to create this thought-provoking and playful reflection on today’s world. Each print in the series of 81 Questions is hand-signed and numbered by the artist in editions of 81.
